I actually attempted to build my own Linux distro from scratch waaaaaaaaay back in the early 2000s when I got out of college and started working for IBM. I just couldn’t get it to work. Maybe it was because I was younger and impatient or maybe I just didn’t know as much as I thought I did, but either way, I never finished it, and it has been one of those elusive things that I wished I’d done, much like getting my CISSP.

Now that I have my CISSP cert, I figured I’d give this another go and see if I can finally conquer the challenge I set for myself almost 20 years ago.

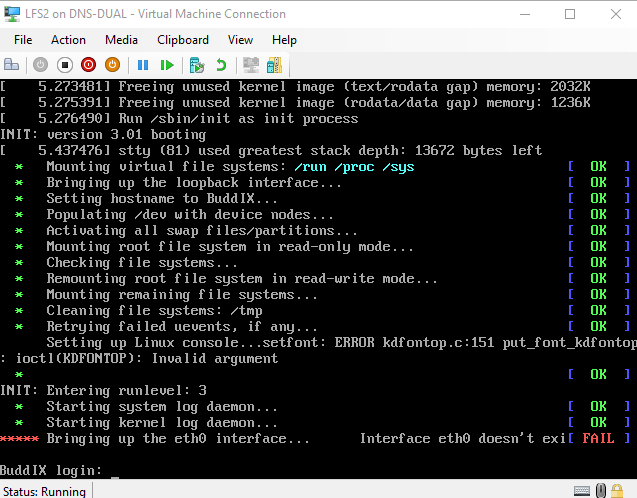

(Yes I know eth0 doesn’t exist. I need to figure out the right drivers for this Virtual Machine)

The Host

To build LFS I’ll need a host OS to build the toolchain and the initial environment. The entire process is very much like cross-compiling software for a different architecture, except the target is the same architecture as the host.

LFS doesn’t list any recommended distros, and while I’m partial to Debian as I’ve been running my servers with it for quite literally decades now, I decided to give a different, more modern distro a go simply because I wanted to make sure that all the libraries were new enough to meet or exceed the LFS requirements. After looking around for a bit, I found a great guide from Kernotex on YouTube who was running EndeavourOS, so I figured I’d give it a go.

The idea was to use EndeavourOS’s pre-installation live environment as my temporary host on a Hyper-V VM. Normally I use VirtualBox but I figured I might as well give Hyper-V a go.

I ran into some minor hiccups but eventually got it to work. The fixes are outlined in my post here: https://www.buddroyce.com/?p=687

Hyper-V VM Settings were:

- Generation 2 VM (I totally should have used Generation 1, will cover why later in this post)

- 6 Cores assigned (I originally had 24 cores assigned but it didn’t work that well. Will cover it later in this post)

- 8GB-16GB of RAM

- 50GB of HDD space

- TURN SECURE BOOT OFF!! (otherwise EndeavourOS wouldn’t boot)

Why did I use EndeavourOS? Not going to lie, I was just following the Kernotex video on YouTube, but even though he was building LFS 10.1 and I was building 11.1, EndeavourOS had all the up-to-date binaries needed with next to no additional software required, which is awesome.
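If you want to sanity check a different host before committing to it, a rough sketch like the one below compares a few tool versions against minimums. The helper names `ver_ge` and `check_tool` are made up for this example, and the minimum versions shown are illustrative placeholders, so take the real numbers from the Host System Requirements page of the LFS book you’re building.

```shell
# ver_ge A B: succeeds when version A is greater than or equal to version B.
# Relies on GNU sort's -V (version sort) option.
ver_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# check_tool NAME MINIMUM: print whether NAME's reported version meets MINIMUM.
check_tool() {
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "MISSING  $1 (want >= $2)"
        return 0
    fi
    # Grab the first dotted number on the first line of "NAME --version".
    found=$("$1" --version 2>/dev/null | head -n1 | grep -oE '[0-9]+(\.[0-9]+)+' | head -n1)
    if [ -n "$found" ] && ver_ge "$found" "$2"; then
        echo "OK       $1 $found (want >= $2)"
    else
        echo "TOO OLD  $1 ${found:-unknown} (want >= $2)"
    fi
}

# Placeholder minimums: replace with the values from your LFS book version.
check_tool bash 3.2
check_tool make 4.0
check_tool gcc 4.8
```

This only covers three tools and assumes each one prints its version on the first line of `--version`, so treat it as a quick smoke test rather than a full requirements check.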

Unlike my attempt at trying to build Unikernels with Unikraft, getting things up and running for my LFS build was waaay smoother.

The Build

For years, whenever I looked at doing an LFS build, I always ran into problems. Usually it was versioning and dependency problems that I just didn’t want to sort out, or differences between what was in the LFS book and what I was actually getting.

Thankfully LFS 11.1 came out pretty recently so a lot of the software versions from the book were still valid and most problems were addressed in the book. Between the book and Kernotex’s video, I didn’t find this build all that daunting.

Overall the whole process could have been done over a weekend, but I made some screw ups that caused me to backtrack a little, which I’ll cover.

Issues

Initially I ran into problems booting into a Linux host OS. EndeavourOS didn’t want to work and the Ubuntu pre-installation environment didn’t want to work either, but those aren’t build specific issues. My build specific issues were:

- Errors Compiling & Testing

- Cleaning too many things up

- Not enough system ram

- Unable to get LFS to boot at all

Issue 1: Errors Compiling

Originally I created the VM with Hyper-V Quick Create, which automatically defaulted to 24 cores and whatever RAM I had.

I set MAKEFLAGS="-j24" as an environment variable to make full use of the 24 cores, but for some strange reason some of my compiles started spitting out errors.

Reducing it down to 20 cores seemed to help, but I had to bring it down to 4-6 cores for code to finally compile without any errors.

I don’t know the exact cause, but it’s likely that some of the build scripts aren’t very parallel compile friendly. Concurrency is always an issue, and with 24 jobs running at once it’s likely that one job tried to use a file that another job hadn’t finished building yet, something that never shows up on a 1-4 core system where things happen to finish in order.

If you run into similar problems, try reducing the number of assigned cores or parallel jobs.

make -j4 will run up to 4 compile jobs in parallel while make -j1 will run them one at a time, and there were definitely instances where I had to drop down to a single job to compile and test properly.
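Here’s a minimal sketch of how that could look in the build shell. The `pick_jobs` and `build_with_fallback` helpers are hypothetical names for this example, and the cap of 6 is just the number that happened to work for me, not a recommendation.

```shell
# pick_jobs LIMIT: print the smaller of LIMIT and the number of available cores.
pick_jobs() {
    limit=${1:-6}
    cores=$(nproc)
    if [ "$cores" -lt "$limit" ]; then
        echo "$cores"
    else
        echo "$limit"
    fi
}

# make reads MAKEFLAGS from the environment, so every later make call picks this up.
export MAKEFLAGS="-j$(pick_jobs 6)"
echo "MAKEFLAGS is $MAKEFLAGS"

# build_with_fallback: try the parallel build first, then retry with a single job.
build_with_fallback() {
    make || make -j1
}
```

Run `build_with_fallback` from inside a package’s source directory. If the serial retry succeeds where the parallel run failed, that points at a parallel build problem rather than a genuinely broken package.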

Issue 2: Cleaning up too many things

I seriously blame myself for being impatient and just copying and pasting stuff here.

During my first run through, on my 3rd day of building LFS, I finished installing all the binaries and reached chapter 8.77 (Stripping). For some stupid reason I decided that it was a good idea to do the optional stripping of debugging symbols from binaries and libraries to save space. Little did I know that it would screw things up for me two chapters later when I tried to build the Linux Kernel.

Ended up redoing the whole thing again and learning from my mistakes. If it’s optional, it’s probably best not to do it until AFTER I get a couple of successful builds in.

Issue 3: Not enough system RAM

I should clarify that the VM has more than enough RAM. My system has 64GB of RAM but 32GB of it is allocated as a RAM cache for my 3 drives. That meant that I had 32GB of RAM left, which was more than enough… until I tried to do something else while code was compiling.

I found that while I could watch YouTube videos while I had the VM running, I couldn’t do much else. Firing up any video games on the host Windows machine would cause things to really lag.

It turned out that the VM and my RAM caching solution were conflicting a bit. Reducing my RAM cache down from 32GB to 16GB solved the issue.

I have an additional 64GB of RAM on order to bring my machine to 128GB of RAM to fully avoid this even with several VMs running in the background.

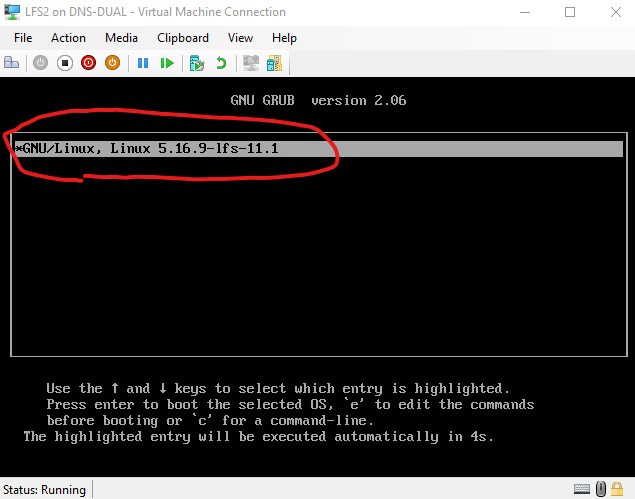

Issue 4: Unable to get LFS to boot at all

This literally took me a day or two to figure out. After getting everything all reinstalled and building out my kernel, I was ready to reboot the system and all I got was… nothing.

It turns out that the base LFS build was meant for legacy systems and not systems running UEFI.
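A quick way to tell which firmware mode a running Linux system was booted in is to look for /sys/firmware/efi, which the kernel only exposes on a UEFI boot. Running something like the sketch below from the live host environment would have told me up front what kind of VM I was dealing with. The `firmware_mode` function name and its optional path argument are just for this example.

```shell
# firmware_mode [PATH]: print UEFI if the EFI directory exists, otherwise BIOS.
# PATH defaults to /sys/firmware/efi; the argument only exists to make testing easy.
firmware_mode() {
    if [ -d "${1:-/sys/firmware/efi}" ]; then
        echo "UEFI"
    else
        echo "BIOS"
    fi
}

echo "Booted in $(firmware_mode) mode"
```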

The Hyper-V VM I was using defaulted to Generation 2, which means it boots with UEFI and not a legacy BIOS. The thing is… once you create a VM and set its generation, you can’t change it again, so I thought I was shit outta luck… until I decided to create a new VM using the Generation 1 setting and the existing .vhdx hard drive file.

Since the .vhdx file is literally just a disk image, I figured I could use it the same way I would a real hard drive. If everything was physical, I would have just taken the hard drive with LFS on it and shoved it into a system with a legacy BIOS.

To my surprise, it actually booted.